Genome centres combine forces to validate a gene set for biomedical research

This work addresses the fact that the genes listed in human genome databases are often not fully validated, and that the same gene may have different names in different databases. Because the data characterizing the genes come from a variety of sources, researchers may need to question whether a listed gene is real and whether its stated function is accurate.

“At Ensembl we have been continuously improving our gene prediction methods, and the CCDS collaboration provides the next step in both accuracy and stability of gene structures, through a process called ‘curation’. For high-investment gene sets, having three groups independently verify gene structures in this collaborative manner will provide the world with the highest possible quality set.”

Ewan Birney, Head of the Ensembl team at the EBI

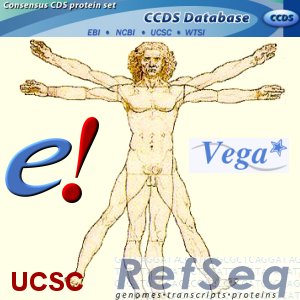

After more than a year of work, the collaboration has released a set of 14,795 genes that have been carefully examined and accurately characterized. This gene set, called the CCDS set (for ‘consensus coding sequence’), was posted today on three internet sites: the Ensembl Browser at the EBI and the Wellcome Trust Sanger Institute, the UCSC Genome Browser, and the NCBI RefSeq website.

The CCDS genes have been given unique identifiers and version numbers to help locate them on genome maps. Each site will receive regular updates as the collaboration continues to refine its knowledge of the protein-coding genes.
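As a minimal sketch of how such an identifier might be handled in practice, the snippet below splits an ID into its number and version. It assumes identifiers of the form `CCDS<number>.<version>` (for example `CCDS2.2`); the article does not specify the format, and the names `CcdsId` and `parse_ccds_id` are hypothetical.

```python
import re
from typing import NamedTuple


class CcdsId(NamedTuple):
    """Hypothetical container for a parsed CCDS identifier."""
    number: int   # the stable identifier part
    version: int  # incremented when the record is revised


# Assumed format: the literal prefix "CCDS", digits, a dot, digits.
_CCDS_PATTERN = re.compile(r"CCDS(\d+)\.(\d+)")


def parse_ccds_id(text: str) -> CcdsId:
    """Split an identifier such as 'CCDS2.2' into number and version."""
    match = _CCDS_PATTERN.fullmatch(text.strip())
    if match is None:
        raise ValueError(f"not a CCDS identifier: {text!r}")
    return CcdsId(int(match.group(1)), int(match.group(2)))
```

Keeping the version separate from the number lets a record be tracked across updates while still telling revisions apart.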

“Resolving inconsistencies between gene structures generated by complementary methods of manual curation from the Havana and RefSeq groups and automatic annotation from Ensembl and NCBI is a major step towards providing stable and accurate annotation that can be relied on by researchers.”

Tim Hubbard, Head of Human Genome Analysis at the Wellcome Trust Sanger Institute

Finding genes in large genomes such as the human genome is an extremely difficult task, involving complex computer and manual analysis. This process of ‘annotation’ is complicated by the fact that only some 2 per cent of the human genome is thought to code for protein.

Different genome centres have used different methods to make gene predictions and to verify those predictions. Inevitably, the three systems produced some discrepancies in gene naming, location or structure. The new collaboration is designed to provide the best of those results.

“Now that biomedical science has an internationally accepted reference human genome to work from, it’s time to identify a corresponding reference set of human genes from that genome.”

David Haussler, a Howard Hughes Medical Institute Investigator from UC Santa Cruz

The gene structure information comes from a combination of automatically predicted and manually curated genes. The main curation groups are the Havana team at the Wellcome Trust Sanger Institute and the RefSeq annotation group at NCBI. In addition, manually curated information on chromosome 14 (Genoscope) and chromosome 7 (Washington University, St Louis) has been brought in via the Vega resource.

The automatic methods are provided by the Ensembl group and the computational pipeline of RefSeq. Curated information is favoured over automated information, and the information must both be consistent between the Hinxton (Vega/Ensembl) and NCBI groups and pass the stringent quality controls applied by UCSC.
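The selection rule described above can be illustrated with a short sketch. This is not the collaboration's actual pipeline: the `GeneModel` record, the reduction of a gene to its coding exon coordinates, and the `passes_qc` callback are all assumptions made for illustration, and the function handles the candidate models for a single locus only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GeneModel:
    """Hypothetical, simplified annotation of one coding region."""
    chrom: str
    strand: str
    cds_exons: tuple  # ((start, end), ...) of the coding exons
    curated: bool     # True for manual curation, False for automatic


def prefer_curated(models):
    """Within one group, keep curated models if any exist."""
    curated = [m for m in models if m.curated]
    return curated if curated else list(models)


def structure(model):
    """The part of a model that must match between groups."""
    return (model.chrom, model.strand, model.cds_exons)


def consensus(hinxton_models, ncbi_models, passes_qc):
    """Return structures agreed by both groups that also pass QC.

    passes_qc is a caller-supplied predicate standing in for the
    quality controls; its real criteria are not given in the text.
    """
    hinxton = {structure(m) for m in prefer_curated(hinxton_models)}
    ncbi = {structure(m) for m in prefer_curated(ncbi_models)}
    return sorted(s for s in hinxton & ncbi if passes_qc(s))
```

A structure is kept only if both groups report it identically and the quality check accepts it, which mirrors the conservative, leave-it-out-when-in-doubt approach.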

Even with huge dedicated computer power, the cataloguing of human genes remains a severe challenge.

“Finding all the genes in the DNA sequence of the human genome has proven to be much more difficult than we ever imagined. It will take the coordinated efforts of experimentalists and computational biologists many more years to complete this task.”

David Haussler, a Howard Hughes Medical Institute Investigator from UC Santa Cruz

According to Mark Diekhans, a member of the UCSC Genome Bioinformatics Group, the human element has been critical in this project, applying ‘a lot of gut-level filters’ to the data. The collaborative team used a conservative process.

“We were going for high quality and high confidence. When in doubt about a gene, we left it out of our set. This makes the CCDS a valuable reference set for disease research.”

Mark Diekhans, a member of the UCSC Genome Bioinformatics Group

“All participants have found the comparison and data exchange process constructive towards improving their own genome annotations and their methods.”

Richard Durbin, Head of the informatics division at the Wellcome Trust Sanger Institute

More information

The Wellcome Trust Sanger Institute

The Wellcome Trust Sanger Institute, which receives the majority of its funding from the Wellcome Trust, was founded in 1992. The Institute is responsible for the completion of the sequence of approximately one-third of the human genome as well as genomes of model organisms and more than 90 pathogen genomes. In October 2006, new funding was awarded by the Wellcome Trust to exploit the wealth of genome data now available to answer important questions about health and disease.

The Wellcome Trust and Its Founder

The Wellcome Trust is the most diverse biomedical research charity in the world, spending about £450 million every year both in the UK and internationally to support and promote research that will improve the health of humans and animals. The Trust was established under the will of Sir Henry Wellcome, and is funded from a private endowment, which is managed with long-term stability and growth in mind.